The Minor Area Motion Imagery (MAMI) dataset contains imagery from a large-format electro-optical airborne platform and four ground-based video cameras. The data was collected in August 2013 during an AFRL/RYA picnic at Wright-Patterson Air Force Base (WPAFB), Ohio.

The sample image has been scaled to ten percent of its original size and was registered with the 200 mm cameras in the center and the 50 mm cameras on the outside. The raw data is also available, so registration is optional. In addition, the area outside WPAFB is blacked out to ensure the privacy of US citizens.

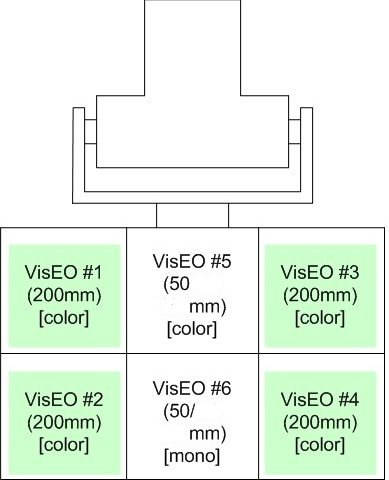

The sensor is a large-format electro-optical sensor composed of a matrix of six cameras arranged in 2 rows by 3 columns. Cameras 1, 5, and 3, in that order, make up the top row of the image; cameras 2, 6, and 4 make up the bottom row. Each camera was oriented to maximize coverage while leaving enough overlap between images to help mosaic them into a larger image. For this data collection, the cameras collected data at approximately 15 frames per second.

The gimbal layout is oriented as if a human were looking into the cameras.

Cameras 1, 2, 3, and 4 have 200 mm lenses, while the inside cameras 5 and 6 have 50 mm lenses. All cameras except camera 6 are color. The data is stored in the standard lossless PNG file format.
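The layout described above can be captured in a small lookup structure. The following is a minimal sketch, not part of the dataset tooling; the names `GIMBAL_LAYOUT`, `FOCAL_LENGTH_MM`, `IS_COLOR`, and `grid_position` are hypothetical and chosen only for illustration.

```python
# Camera layout as described in the text: 2 rows by 3 columns,
# oriented as if looking into the cameras.
GIMBAL_LAYOUT = [
    [1, 5, 3],  # top row of the image
    [2, 6, 4],  # bottom row of the image
]

# Lens focal length in millimetres; cameras 5 and 6 are the inside pair.
FOCAL_LENGTH_MM = {1: 200, 2: 200, 3: 200, 4: 200, 5: 50, 6: 50}

# Every camera is color except camera 6.
IS_COLOR = {camera: camera != 6 for camera in FOCAL_LENGTH_MM}


def grid_position(camera):
    """Return the zero-based (row, column) of a camera in the 2 x 3 matrix."""
    for row_index, row in enumerate(GIMBAL_LAYOUT):
        if camera in row:
            return row_index, row.index(camera)
    raise ValueError(f"unknown camera number: {camera}")
```

For example, `grid_position(5)` gives the middle of the top row, and `FOCAL_LENGTH_MM[5]` confirms that it is one of the 50 mm cameras.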

Cameras 1 - 5 are Prosilica GE2040C cameras, while camera 6 is a GE2040. The cameras have the following specifications:

| Specification | Value |
| --- | --- |
| Interface | IEEE 802.3 1000BASE-T |
| Resolution | 2040 x 2048 |
| Sensor | OnSemi KAI-04022 |
| Sensor Type | CCD Progressive |
| Sensor Size | Type 1.2 |
| Cell Size | 7.4 µm |
| Frame Rate | 15 fps |
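As a rough worked example, the resolution and cell size in the table imply the physical extent of the sensor, and combining that with the 200 mm and 50 mm lens focal lengths gives an approximate field of view per camera. This sketch assumes an ideal pinhole model with no lens distortion and treats the two resolution figures as pixel counts along the two sensor axes (which figure is horizontal is an assumption). The function names are hypothetical.

```python
import math

CELL_SIZE_MM = 7.4e-3   # 7.4 um cell size from the specification table
PIXELS = (2040, 2048)   # resolution from the table; axis order is assumed


def sensor_extent_mm(pixels, cell_size_mm=CELL_SIZE_MM):
    """Physical length of the sensor along one axis, in millimetres."""
    return pixels * cell_size_mm


def field_of_view_deg(pixels, focal_length_mm, cell_size_mm=CELL_SIZE_MM):
    """Full angular field of view along one axis for an ideal pinhole camera."""
    extent = sensor_extent_mm(pixels, cell_size_mm)
    return math.degrees(2.0 * math.atan(extent / (2.0 * focal_length_mm)))


for focal_length in (200, 50):
    fov = [field_of_view_deg(n, focal_length) for n in PIXELS]
    print(f"{focal_length} mm lens: {fov[0]:.2f} x {fov[1]:.2f} degrees")
```

Under these assumptions each 200 mm camera covers a little over 4 degrees per axis and each 50 mm camera roughly 17 degrees. Actual coverage depends on the real optics and platform geometry.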

The ground-based video cameras are Panasonic HDC-SD9 cameras, which were installed on tripods. They have the following specifications:

| Specification | Value |
| --- | --- |
| Make and Model | Panasonic HDC-SD9 |
| Resolution | 1920 x 1080 |
| Ground Sample Distance | 0-5 cm cross range pixel spacing |
| Frame Rate | 29.97 fps |

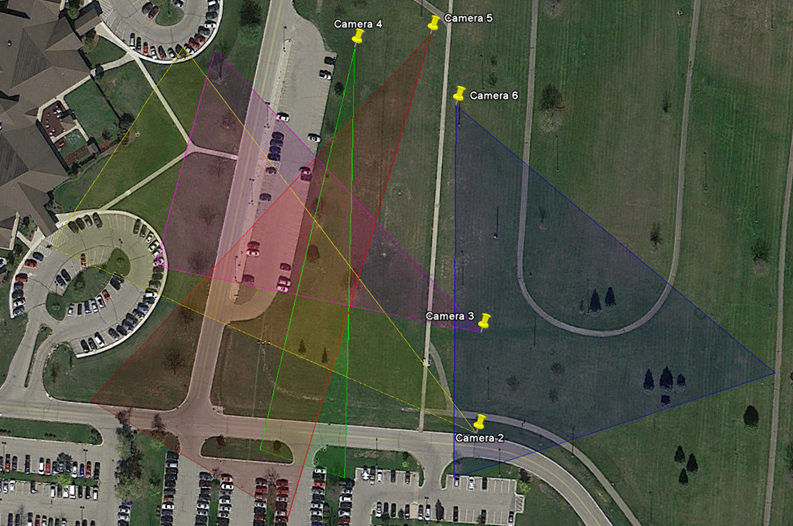

The fields of view are provided below mapped on a satellite image for reference.

A sample image from each of the four ground based cameras.

All data was synchronized to a common time scale with errors of less than 0.1 s. Each ground camera video file has a corresponding XML metadata file, which includes the start time of the file and the position of the camera (which does not move over time). These cameras use a frame rate of 29.97 fps, which was verified to be accurate enough to use as a synchronization mechanism. The airborne MAMI data includes a set of frames with one XML metadata file per set. This file contains a record for each frame, giving the UTC time of the frame. Additionally, each MAMI frame was registered to a geo-coordinate system via homography. This homography was used to generate image corners for each frame, which are included in the frame's record in the XML metadata file.
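The synchronization described above can be sketched as follows: the time of a ground video frame is the file start time plus the frame index divided by the frame rate, and the matching MAMI frame is the one with the closest UTC time. This is a minimal illustration only. It assumes the start time and the per-frame UTC times have already been read from the XML metadata (the XML element names are not specified here, so no parsing is shown), and the function names are hypothetical.

```python
from bisect import bisect_left
from datetime import datetime, timedelta, timezone

GROUND_FPS = 29.97  # frame rate stated for the ground cameras


def ground_frame_time(start_time, frame_index, fps=GROUND_FPS):
    """UTC time of a ground video frame, counting frames from zero."""
    return start_time + timedelta(seconds=frame_index / fps)


def nearest_mami_frame(mami_times, query_time):
    """Index of the MAMI frame whose time is closest to query_time.

    mami_times must be sorted in ascending order.
    """
    if not mami_times:
        raise ValueError("mami_times is empty")
    pos = bisect_left(mami_times, query_time)
    candidates = [i for i in (pos - 1, pos) if 0 <= i < len(mami_times)]
    return min(candidates, key=lambda i: abs(mami_times[i] - query_time))


# Hypothetical values for illustration; real times come from the metadata.
start = datetime(2013, 8, 1, 16, 0, 0, tzinfo=timezone.utc)
mami_times = [start + timedelta(seconds=k / 15.0) for k in range(150)]
match = nearest_mami_frame(mami_times, ground_frame_time(start, 60))
print(match)
```

Because the airborne frames arrive at roughly 15 fps, the nearest-frame match can differ from the query time by up to about half a frame interval, on top of the stated synchronization error.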

The data collected for this data set contains the following information:

A variety of objects in the scene were tracked by humans. These tracks are provided in the data set. The time column in the truth file corresponds to the time of the MAMI frame that was used to generate the track point, which is available in the MAMI XML metadata. This truth file includes the following fields: id, trackId, target_type, color, time, frame, latitude, longitude, pointType, reg_x, reg_y, reg_fileId, raw_x, raw_y, and raw_fileId. The reference data is stored in s3://sdms-mami-i/mami_track_truth_corrected_time.zip
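The field list above suggests a simple way to group truth points into tracks. The sketch below assumes the truth file is comma-separated text with a header row that uses exactly these field names, and that `frame` is an integer. Neither is confirmed here, so check the unpacked file before relying on it. The function name `load_tracks` and the sample rows are made up for illustration.

```python
import csv
import io
from collections import defaultdict

TRUTH_FIELDS = [
    "id", "trackId", "target_type", "color", "time", "frame",
    "latitude", "longitude", "pointType",
    "reg_x", "reg_y", "reg_fileId", "raw_x", "raw_y", "raw_fileId",
]


def load_tracks(text_stream):
    """Group truth points by trackId, assuming CSV text with a header row."""
    reader = csv.DictReader(text_stream)
    missing = set(TRUTH_FIELDS) - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    tracks = defaultdict(list)
    for row in reader:
        tracks[row["trackId"]].append({
            "frame": int(row["frame"]),  # assumed to be an integer
            "latitude": float(row["latitude"]),
            "longitude": float(row["longitude"]),
        })
    for points in tracks.values():
        points.sort(key=lambda p: p["frame"])  # order each track by frame
    return dict(tracks)


# Made-up rows for illustration only; they are not taken from the truth file.
SAMPLE = io.StringIO(
    ",".join(TRUTH_FIELDS) + "\n"
    "1,7,vehicle,red,0.0,2,39.80,-84.00,point,10,20,a,30,40,b\n"
    "2,7,vehicle,red,0.0,1,39.81,-84.01,point,11,21,a,31,41,b\n"
)
for track_id, points in load_tracks(SAMPLE).items():
    print(track_id, [p["frame"] for p in points])
```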

The data is organized into five directories called "scene observation collections" (SOCs). SOCs 1 - 4 each contain seven minutes of data, while SOC 5 contains five minutes. The total data set is 33 minutes long. Each SOC directory has a mami-X directory for each camera, where X is the camera number. Each mami-X directory contains a metadata.xml file with a record for each frame, giving the UTC time of the frame. Additionally, each MAMI frame was registered to a geo-coordinate system via homography. This homography was used to generate image corners for each frame, which are included in the frame's record in the metadata file.
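To illustrate how image corners can be derived from a homography, the sketch below maps the four corner pixels of a frame through a 3 x 3 matrix. It is a generic illustration, not the procedure used to produce the metadata. The matrix convention (pixel to geo-coordinate, applied to column vectors), the corner order, the default image size, and the function names are all assumptions.

```python
def apply_homography(h, x, y):
    """Map pixel (x, y) through a 3 x 3 homography given as nested lists."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ZeroDivisionError("point maps to infinity")
    return u / w, v / w


def image_corners(h, width=2040, height=2048):
    """Project the corner pixels in the order: top-left, top-right,
    bottom-right, bottom-left. The default size is an assumption taken
    from the camera resolution."""
    pixels = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    return [apply_homography(h, x, y) for x, y in pixels]


# Hypothetical homography: a uniform scale by 2 plus a translation.
H_EXAMPLE = [[2.0, 0.0, 10.0], [0.0, 2.0, 20.0], [0.0, 0.0, 1.0]]
print(image_corners(H_EXAMPLE))
```

Since the corners are already stored in each frame's metadata record, a calculation like this is mainly useful for checking an independently estimated registration against the supplied one.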

The AFRL/Sensors Directorate is interested in novel research using this dataset, especially novel approaches to:

If you publish results based on this data please send a reference to sdms_help@vdl.afrl.af.mil so we can update the bibliography section of this page.

In the computer vision community, great strides have been made in the last decade, at least partially owing to the development of complex standard labeled datasets and challenges such as PASCAL VOC and ImageNet LSVRC. While the advances in object detection, localization, and recognition are undeniable, most approaches are oriented toward understanding the data itself, rather than the physical scene that the data describes. There is certainly utility in this viewpoint and the algorithms developed in response to these challenges will undoubtedly be essential pieces of sensing systems for many years, but more comprehensive representations are needed when dealing with multiple viewpoints and multiple sensing modalities. In particular, coherence across sensing streams of the representations of objects and their relationships is vitally important. In this context, we provide a scene understanding challenge problem with an adaptive performance estimation testing harness based on a querying system, called adaptive software for query-based evaluation (ASQE), intended to spur advances in unified representations and reasoning.

For more information on the challenge problem, please see:

MAMI sample data products, sized to fit on a DVD (4.8 GB), are available immediately at no cost.

The data product above is available for immediate download.

If your project requires more data than is available on the sample DVD, please see the section below on bulk data access.

The Minor Area Motion Imagery (MAMI) 2013 data is available from Amazon Simple Storage Service (S3) in Requester Pays buckets (i.e., you are charged by Amazon to access, browse, and download this data). Please see Requester Pays Buckets in the Amazon S3 Guide for more information on this service. Your use of Amazon S3 is subject to Amazon's Terms of Use. The accessibility of SDMS data from Amazon S3 is provided "as is" without warranty of any kind, expressed or implied, including, but not limited to, the implied warranties of merchantability and fitness for a particular use. Please do not contact SDMS for assistance with Amazon services.

The name of the S3 bucket is sdms-mami-i (s3://sdms-mami-i).

We do not track the development of tools that interact with Amazon S3, nor do we endorse any particular tool. However, while developing this facility we found the Python package s3cmd to be useful on Mac OS X and Linux. For Windows, the AWS team suggested s3browser.com and CrossFTP.

The examples below were developed and tested on Linux and Mac OS X using the Enthought Canopy Python distribution. First, you must establish an AWS account and download your AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

Export your key ID and secret access key to the shell environment, replacing the values of AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY shown here with the values from your newly established AWS account:

```shell
export AWS_ACCESS_KEY_ID="JSHSHEUESKSK65"
export AWS_SECRET_ACCESS_KEY="kskjskjsAEERERERlslkhdjhhrhkjdASKJSKJS666789"
```

Install s3cmd with pip:

```shell
pip install s3cmd
```

To list the directory of the sdms-mami-i bucket use the following command:

```shell
s3cmd --requester-pays ls s3://sdms-mami-i/
```

To get a directory listing of SOC1, use this command:

```shell
s3cmd --requester-pays ls s3://sdms-mami-i/SOC1/
```

To determine the amount of disk space used by the SOC1 directory, use the command:

```shell
s3cmd --requester-pays --recursive --human du s3://sdms-mami-i/SOC1/
```

To retrieve a file:

```shell
s3cmd --requester-pays get s3://sdms-mami-i/SOC1/description.xml
```

We do not recommend using the s3cmd sync capability: the MAMI data set contains so many files and so much data that errors will result.
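One possible alternative to sync, offered only as a sketch and not as a tested SDMS procedure, is to retrieve the data in smaller pieces, one camera directory at a time. The example below builds (but does not run) one `s3cmd get --recursive` command per directory. It assumes the bucket prefixes follow the directory description, that is `SOC1` through `SOC5` each containing `mami-1` through `mami-6`; only the `SOC1` prefix appears in the commands above, so verify the names with `ls` first. The function name and the `mami_data` destination are hypothetical.

```python
import shlex

BUCKET = "s3://sdms-mami-i"


def download_commands(socs=range(1, 6), cameras=range(1, 7), dest="mami_data"):
    """Build one s3cmd command per SOC/camera directory (assumed layout)."""
    commands = []
    for soc in socs:
        for camera in cameras:
            prefix = f"SOC{soc}/mami-{camera}/"  # assumed prefix naming
            commands.append([
                "s3cmd", "--requester-pays", "get", "--recursive",
                f"{BUCKET}/{prefix}", f"{dest}/{prefix}",
            ])
    return commands


# Print the commands for review instead of executing them.
for command in download_commands(socs=[1], cameras=[1, 2]):
    print(shlex.join(command))
```

Printing the commands first makes it easy to check the prefixes and the expected Requester Pays charges before transferring anything. The local destination directories may need to exist before the commands are run.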